Modeling the Himalaya Watershed Requires More Powerful Computers

Humans have imagined great breakthroughs in science and technology decades, even centuries, before technological progress made them possible. Oftentimes, people or organizations have had to step up and provide the funds to make those projects a reality. If the United States hopes to keep up in science, engineering and technology, it will need to fund its scientists so they can stay in the race to break the exaflop barrier.

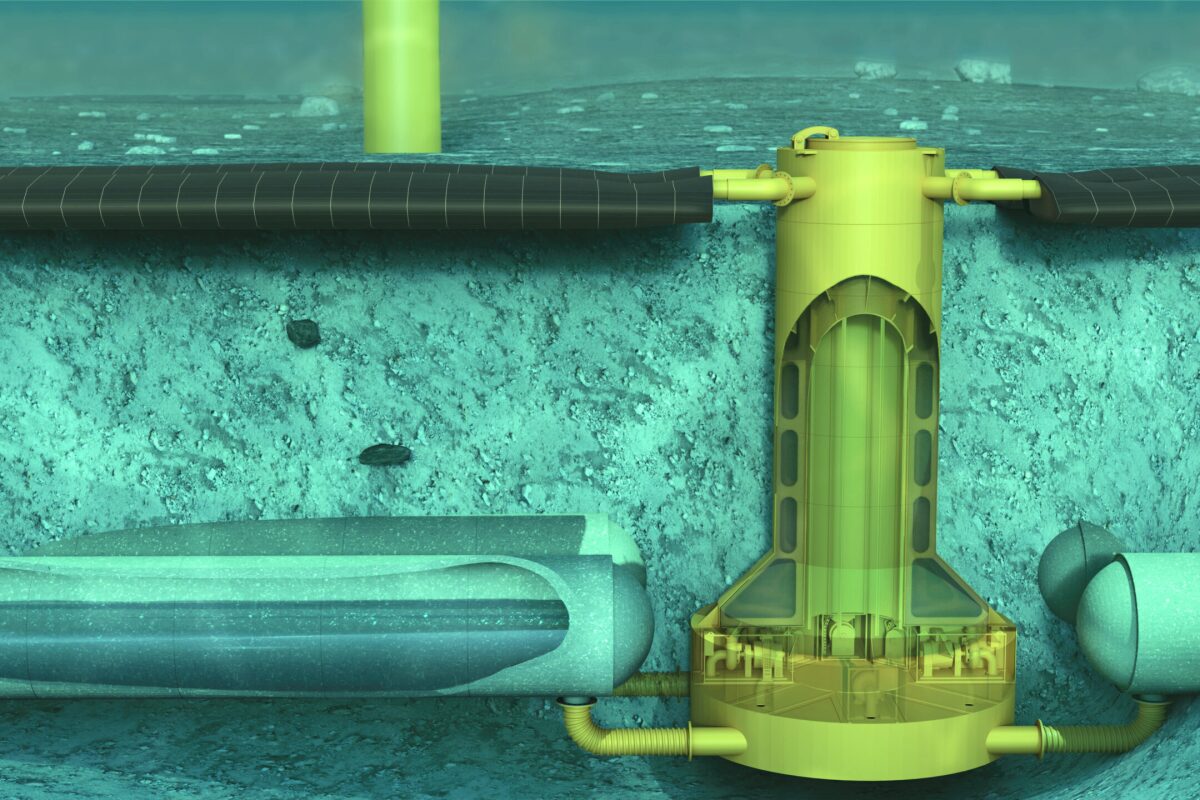

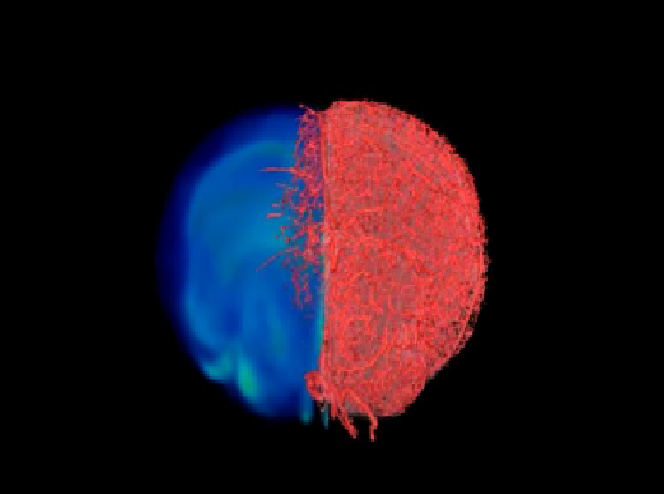

Solving some of today’s great challenges, like more accurately modeling the Himalaya watershed, understanding how hurricanes form, determining how genes work at the molecular level and understanding how brain synapses work, will require vastly more computing power than is currently available.

Recent History of Advances in Computing Power

The United States Department of Energy (DOE) funded the supercomputer research that led to the first teraflop computer in 1997 and the first petaflop computer in 2008. The next great barrier is the exaflop, and it is not clear whether the DOE has the funds needed to meet the challenge. According to scientists, the current barrier to creating exaflop computers is that processors use too much energy and become too hot.

Technical Challenges of Getting to Exaflop

A 1-petaflop computer currently uses 3 MW of power; a “scaled up” 1-exaflop computer would require 200 MW, meaning it would need its own power plant. Scientists believe new designs will allow a 1-exaflop computer by 2018 that requires only 20 MW.
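To put these power figures in perspective, the short Python sketch below converts each one into energy efficiency (gigaflops per watt). It uses only the numbers quoted above; the names `MACHINES` and `gigaflops_per_watt` are illustrative, and the results are back-of-the-envelope estimates rather than measured values.

```python
# Speed and power figures as quoted in the article.
# Keys and names here are illustrative, not from any official source.
MACHINES = {
    "1 petaflop today": {"petaflops": 1, "megawatts": 3},
    "1 exaflop, scaled up": {"petaflops": 1000, "megawatts": 200},
    "1 exaflop, new design": {"petaflops": 1000, "megawatts": 20},
}


def gigaflops_per_watt(petaflops, megawatts):
    """Return energy efficiency in gigaflops per watt."""
    flops = petaflops * 1e15   # 1 petaflop = 10^15 operations per second
    watts = megawatts * 1e6    # 1 MW = 10^6 watts
    return flops / watts / 1e9


if __name__ == "__main__":
    baseline = gigaflops_per_watt(**MACHINES["1 petaflop today"])
    for name, spec in MACHINES.items():
        eff = gigaflops_per_watt(**spec)
        print(f"{name}: {eff:.2f} GFLOPS/W ({eff / baseline:.0f}x today's efficiency)")
```

On these figures, the 20 MW target implies a machine that does roughly 150 times more work per watt than the 1-petaflop system, which is why the article's scientists focus on new processor designs and software rather than simply building a bigger machine.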

New designs will be based on billions of miniature processors like those found in cell phones. New software and algorithms will be designed to run the processors as efficiently as possible so that “communication,” the origin of heat, is minimized.

Cloud Computing Not Fast Enough

Unfortunately, the cloud is 50% slower than supercomputers, and running complex projects in the cloud becomes very expensive. As a result, scientific computing centers, such as those run by the US Department of Energy or by universities, are the best places to do research to vastly improve computer speed.

China & Japan Investing Heavily in Supercomputing

Exaflop computing breakthroughs may not happen first in the United States. China’s 55-petaflop “Milky Way 2” computer runs twice as fast as Titan, the DOE’s 27-petaflop machine at Oak Ridge National Laboratory. And Japan has just invested $1 billion to build an exascale computer by 2020.

For more information, visit US DOE’s Office of Science. The video below shows supercomputers Jaguar and Kraken at the University of Tennessee’s National Institute for Computational Sciences (NICS).
