Let’s discuss BCIs (brain-computer interfaces), which are defined as “devices that enable their users to interact with computers by means of brain activity only”.

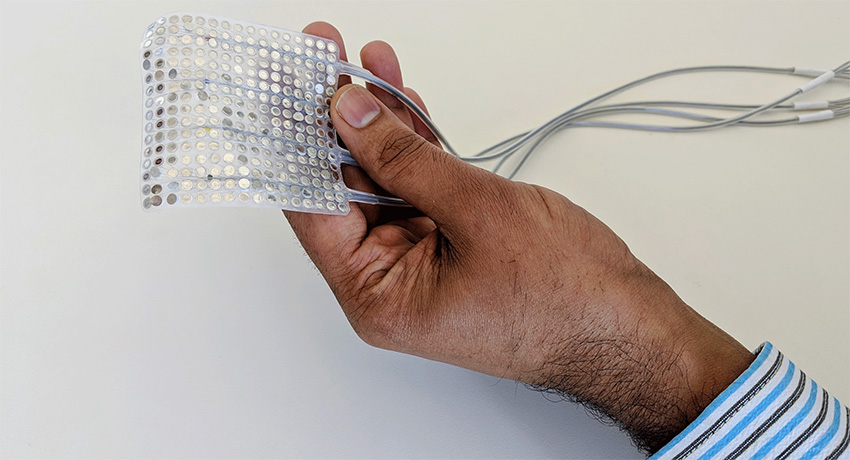

First, let’s step back in time to recognize some fascinating accomplishments in BCI history. In 1924 Hans Berger, a German neuroscientist, first recorded the electrical activity of the human brain using EEG (electroencephalography), a method that records the brain’s electrical activity via electrodes placed on the scalp.

Then, in 1977, Jacques Vidal’s experiment became the first application of BCI: EEG was used to control a cursor-like graphical object on a computer screen.

Scientists from the University of California, San Francisco, designed a system based on computational neural networks that translates brain signals into a synthesized voice. The research focused on restoring speech to people with paralysis or neurological damage, and the team published their paper in the scientific journal “Nature”.

This brings us to computational neural networks, defined as follows: “A neural network is a type of machine learning which models itself after the human brain. This creates an artificial neural network that via an algorithm allows the computer to learn by incorporating new data.” In networking terms, this is somewhat like a self-healing overlay network of routers that learns new routing tables (IP tables) as new nodes are added.
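To make “learning by incorporating new data” concrete, here is a minimal Python sketch of the simplest possible artificial neural network: a single neuron, known as a perceptron. It is a toy illustration under our own assumptions (the class and function names, the learning rate and the AND-gate training data are all hypothetical), not the network used in the UCSF study.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical example type: (inputs, expected output)
Example = Tuple[Sequence[float], int]


def step(x: float) -> int:
    """Threshold activation: the neuron 'fires' (returns 1) only when its input is positive."""
    return 1 if x > 0 else 0


class Perceptron:
    """A single artificial neuron: a weighted sum of inputs passed through a threshold."""

    def __init__(self, n_inputs: int, learning_rate: float = 0.1, seed: int = 0) -> None:
        rng = random.Random(seed)  # fixed seed so runs are reproducible
        self.weights: List[float] = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, inputs: Sequence[float]) -> int:
        total = sum(w * x for w, x in zip(self.weights, inputs)) + self.bias
        return step(total)

    def learn(self, inputs: Sequence[float], target: int) -> int:
        """Incorporate one new example by nudging the weights toward the correct answer.
        Returns the error (0 means the neuron already answered correctly)."""
        error = target - self.predict(inputs)
        for i, x in enumerate(inputs):
            self.weights[i] += self.learning_rate * error * x
        self.bias += self.learning_rate * error
        return error


def train(neuron: Perceptron, examples: Sequence[Example], max_epochs: int = 100) -> int:
    """Repeat passes over the data until one full pass produces no errors.
    Returns the number of passes (epochs) used."""
    for epoch in range(1, max_epochs + 1):
        mistakes = sum(abs(neuron.learn(x, y)) for x, y in examples)
        if mistakes == 0:
            return epoch
    return max_epochs


# Hypothetical toy data: learn the logical AND of two binary inputs.
AND_EXAMPLES: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    neuron = Perceptron(n_inputs=2)
    train(neuron, AND_EXAMPLES)
    print([neuron.predict(x) for x, _ in AND_EXAMPLES])  # → [0, 0, 0, 1]
```

Each wrong answer shifts the weights slightly, so the neuron gradually “incorporates” the examples it has seen. Real speech-decoding networks stack many such units and use far richer learning rules, but the principle of adjusting connection strengths from data is the same.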

Presently, parts of the body are used to “trigger” computer-generated words, e.g. blinking an eye, tapping a hand or finger, or, in the case of the late Stephen Hawking, moving a cheek muscle.
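The “trigger” idea can be illustrated with single-switch scanning, a common approach in assistive communication: the system highlights options one at a time, and a single detected event, such as a blink, selects the highlighted option. The Python sketch below is a simplified assumption of how such a selector might work (the function name, option list and trigger sequence are hypothetical); it is not the software Stephen Hawking actually used.

```python
from typing import Iterable, List


def scan_select(options: List[str], trigger_events: Iterable[bool]) -> List[str]:
    """Hypothetical single-switch scanner.

    On every tick one option is highlighted. If the trigger fires on that tick
    (e.g. a detected blink or cheek twitch), the highlighted option is selected
    and scanning restarts from the first option; otherwise the highlight moves on.
    """
    if not options:
        raise ValueError("options must not be empty")
    selected: List[str] = []
    position = 0  # index of the currently highlighted option
    for triggered in trigger_events:
        if triggered:
            selected.append(options[position])
            position = 0  # restart the scan after each selection
        else:
            position = (position + 1) % len(options)  # highlight the next option, wrapping around
    return selected


if __name__ == "__main__":
    phrases = ["yes", "no", "water", "help"]  # hypothetical vocabulary
    ticks = [False, False, True, True]        # hypothetical trigger stream: two idle ticks, then two triggers
    print(scan_select(phrases, ticks))        # → ['water', 'yes']
```

The design trades speed for simplicity: one reliable binary signal is enough to communicate, but each selection can take several ticks, which is one reason decoding speech directly from brain signals is so attractive.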

However, this is not the full picture. The human brain contains about 86 billion nerve cells called neurons, each linked to other neurons by connectors (synapses), and every time we think, move or feel, neurons are at work. Basically, our brain is a mini power plant generating small electrical signals that move from neuron to neuron.

Part of this activity can be divided into two categories:

- Thought without speech (keeping your thoughts to yourself; this uses other signals yet to be explored by scientists)

- Verbal thoughts (physical speech)

Scientists from the University of California are using a brain-computer interface (BCI) that works out a person’s speech intentions by matching brain signals to physical movements (category 2). This is achieved by having volunteers read, sing and count. By studying the volunteers’ larynx, tongue, jaw and lips and the associated brain signals, they can produce a virtual